My Website Got 400K Unique Visits in 2 Weeks — And It Wasn’t an Attack

Author: Erdi Köse

Here’s a quick story, and hopefully a useful one, for anyone running their own website.

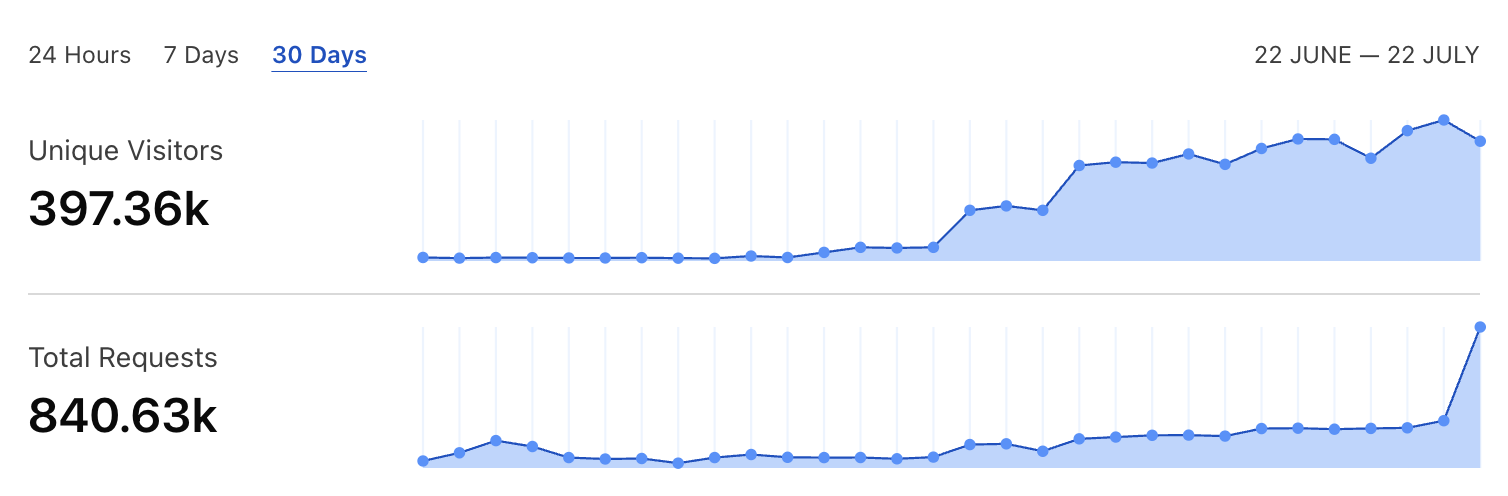

A few days ago, I checked my Cloudflare dashboard and noticed something odd.

My site had received over 222,000 unique visitors in just 7 days, and around 400,000 in the last 2 weeks.

I hadn’t launched anything, done any marketing, or posted anything new.

So this sudden spike felt suspicious.

“Is this an attack? Is someone scraping everything? Will this blow up my Cloudflare bill?”

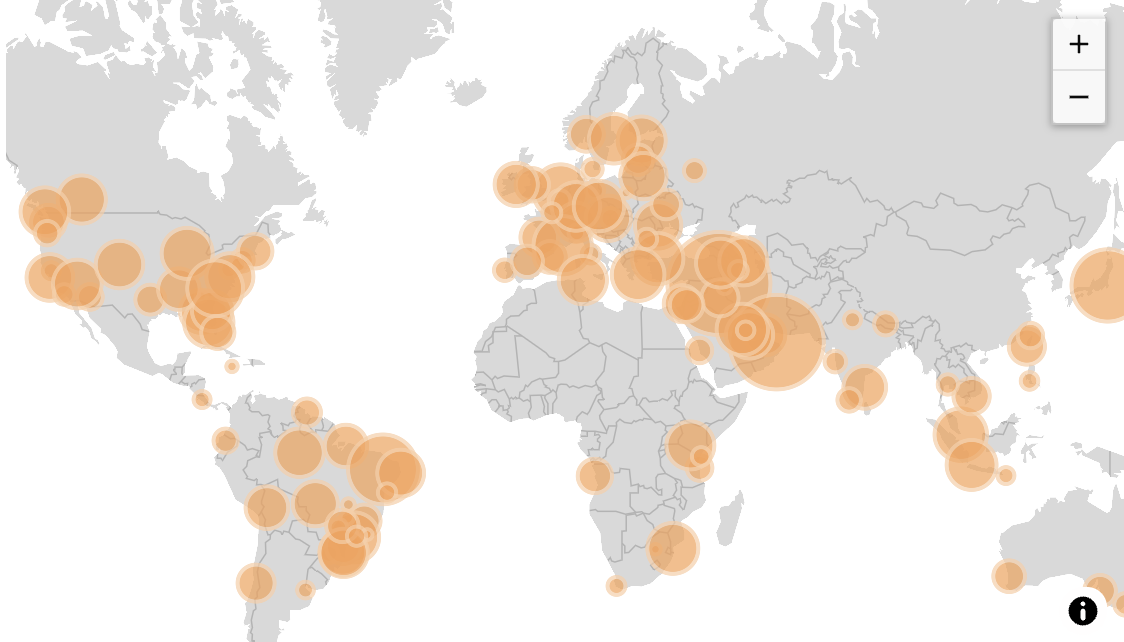

Global Traffic, No Clear Source

What made it even stranger was the traffic coming from all over the world.

Requests came from the US, Europe, Asia, and South America, including countries like Russia and Brazil.

There was no obvious pattern. So I needed to dig deeper.

I immediately reached out to my sister, who works in cybersecurity.

She Figured It Out Fast

She checked the request logs and quickly explained the reason:

It wasn’t an attack — it was bots. Googlebot, Bingbot, Applebot, and a few others.
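If you want to run a similar check on your own logs, here is a minimal sketch of the idea: tally requests by the crawler named in the User-Agent header. The function names (`identifyCrawler`, `countByCrawler`) and the token list are my own assumptions for illustration, not what my sister actually ran, and the input is assumed to be a plain array of User-Agent strings you have already pulled from your logs.

```typescript
// Tokens that these crawlers include in their User-Agent header.
// Illustrative and deliberately short; extend it for your own logs.
const KNOWN_CRAWLERS: Array<[string, string]> = [
  ["googlebot", "Googlebot"],
  ["bingbot", "Bingbot"],
  ["applebot", "Applebot"],
];

// Returns the crawler name if the User-Agent contains a known token, else null.
// Note: this trusts the header, which anyone can spoof.
function identifyCrawler(userAgent: string): string | null {
  const ua = userAgent.toLowerCase();
  for (const [token, name] of KNOWN_CRAWLERS) {
    if (ua.indexOf(token) !== -1) return name;
  }
  return null;
}

// Tallies requests per crawler; anything unrecognized is counted as "other".
function countByCrawler(userAgents: string[]): Record<string, number> {
  const counts: Record<string, number> = {};
  for (const ua of userAgents) {
    const key = identifyCrawler(ua) ?? "other";
    counts[key] = (counts[key] ?? 0) + 1;
  }
  return counts;
}
```

Keep in mind that a User-Agent match is only a hint. Search engines such as Google document reverse DNS lookups as the way to confirm that a request really comes from their crawler.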

And then it hit me:

Two weeks ago, I had deployed a broken sitemap.xml and robots.txt.

With no proper crawl instructions, the bots started crawling everything they could find — every route, every asset.

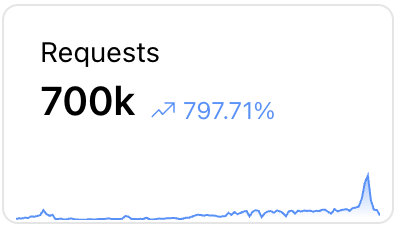

I Fixed It Yesterday — Traffic Dropped

Once I fixed the SEO files, the traffic began to drop almost immediately.

The bots finally found what they were supposed to find — and stopped flooding the site.
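For reference, here is a rough sketch of what well-formed versions of the two files look like, built as plain strings. This is not my actual setup: the helper names (`buildRobotsTxt`, `buildSitemapXml`), the `example.com` URLs, and the disallowed paths in the usage lines are all hypothetical.

```typescript
interface SitemapEntry {
  url: string;           // absolute URL of the page
  lastModified?: string; // optional date string, e.g. "2025-01-15"
}

// Escapes the characters that are not allowed raw inside XML text.
function escapeXml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Builds a sitemap.xml document following the sitemaps.org 0.9 schema.
function buildSitemapXml(entries: SitemapEntry[]): string {
  const urls = entries.map((e) => {
    const lastmod = e.lastModified
      ? `<lastmod>${escapeXml(e.lastModified)}</lastmod>`
      : "";
    return `  <url><loc>${escapeXml(e.url)}</loc>${lastmod}</url>`;
  });
  const header = [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
  ];
  return header.concat(urls, ["</urlset>"]).join("\n");
}

// Builds a robots.txt that applies to all crawlers and points at the sitemap.
function buildRobotsTxt(disallow: string[], sitemapUrl: string): string {
  const lines = ["User-agent: *"];
  // An empty Disallow line means "nothing is blocked".
  if (disallow.length === 0) lines.push("Disallow:");
  for (const path of disallow) lines.push(`Disallow: ${path}`);
  lines.push("", `Sitemap: ${sitemapUrl}`);
  return lines.join("\n") + "\n";
}

// Hypothetical usage: the paths and domain are placeholders.
const robots = buildRobotsTxt(["/api/", "/admin/"], "https://example.com/sitemap.xml");
const sitemap = buildSitemapXml([{ url: "https://example.com/", lastModified: "2025-01-15" }]);
```

In a Next.js App Router project, the `app/robots.ts` and `app/sitemap.ts` file conventions generate these files for you, so you likely would not hand-build the strings. The sketch is only meant to show what the output should roughly contain.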

It Did Add to the Bill

All that bot activity added about $5 to my Cloudflare usage this month.

Not a huge deal, but definitely unexpected — especially for non-human traffic.

And Now My Security Rules Rock

After the fix, my sister also helped me improve my Cloudflare security setup.

She added proper rate limiting, smarter bot filtering, and tweaked caching rules — all simple but powerful changes.

Let’s just say things are way more solid now. I probably wouldn’t have thought of half of them on my own.
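Cloudflare rate limiting is configured as rules on Cloudflare's side, not in application code, and I'm not reproducing my sister's actual rules here. To show the basic idea behind rate limiting, though, here is a small fixed-window limiter sketch. The class name, the limit, and the window size are assumptions chosen for illustration.

```typescript
// Fixed-window rate limiter: at most `limit` requests per `windowMs` per key.
// The key could be a client IP or a User-Agent string.
class FixedWindowLimiter {
  private limit: number;
  private windowMs: number;
  private windows: Record<string, { start: number; count: number }> = {};

  constructor(limit: number, windowMs: number) {
    if (limit < 1 || windowMs <= 0) {
      throw new Error("limit must be >= 1 and windowMs must be > 0");
    }
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Returns true if the request is allowed. `now` (milliseconds) is
  // injectable so the behavior can be checked without real waiting.
  allow(key: string, now: number = Date.now()): boolean {
    const current = this.windows[key];
    // No window yet, or the old one has expired: start a fresh window.
    if (!current || now - current.start >= this.windowMs) {
      this.windows[key] = { start: now, count: 1 };
      return true;
    }
    if (current.count < this.limit) {
      current.count += 1;
      return true;
    }
    return false; // over the limit for this window
  }
}

// Hypothetical usage: allow each client 100 requests per minute.
const limiter = new FixedWindowLimiter(100, 60_000);
const allowed = limiter.allow("203.0.113.7");
```

A fixed window is the simplest variant. It can let through a short burst around a window boundary, which is why production systems often use sliding windows or token buckets instead.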

What I Learned

- Not every traffic spike is an attack — but don’t ignore it either.

- If your sitemap or robots.txt is broken, bots may crawl every URL they can discover.

- Cloudflare’s analytics and logs are super helpful when something feels off.

- Even good bots can increase costs if left unchecked.

- Small changes to your infrastructure can make a big difference.

- Having someone around who knows how to read a firewall log correctly… doesn’t hurt.

If you're using Next.js and Cloudflare, I recommend double-checking your sitemap.xml and robots.txt setup:

👉 Next.js Metadata: sitemap and robots

Thanks for reading — and extra thanks to my sister for sorting it out and hardening everything like a pro 😄